About:

A simple definition: "Artificial intelligence is a machine’s ability to perform the cognitive functions we usually associate with human minds."

See YouTube explainers on concepts and AI models.

See also What is AI? | McKinsey-Explainers

AI started in the 1950s with simple examples like checkers-playing programs and rudimentary language translation.

The term Artificial Intelligence was coined for the Dartmouth College Summer Research Project on Artificial Intelligence, a conference held in 1956.

See history below.

AI can be classified as:

- Narrow AI - Designed for a specific purpose, e.g. medical diagnosis, digital smartphone assistants, self-driving cars, ...

- General AI - Able to handle any intellectual task a human can, e.g. HAL, the computer in 2001: A Space Odyssey (1968), or the intelligence powering R2-D2 in "Star Wars", ...

Some experts say General AI is about 10 years away.

- Super AI - Super AI may use the human brain as a model in order to understand and interpret human emotions and experiences. I would also add the ability to teach itself new things, similar to what the famous psychologist Jean Piaget (1896-1980) studied about how children develop knowledge.

Some general uses:

- Smarter assistants
- Enhanced personalization
- Creative expression
- Enhanced healthcare
- Automation revolution
- Machine learning mastery
- Natural language processing
- Deep learning
- Autonomous vehicles

See Artificial Intelligence: Last Week Tonight with John Oliver (HBO)

Three popular chatbots are available as apps on the internet:

OpenAI's ChatGPT, Microsoft Bing, and Google's Bard (bard.google.com) became popular internet apps in 2023.

(AI language models are, in part, defined by their unknown skills, a quality dubbed "capability overhang" in the AI community.)

See Discover the wonder of AI-powered search - examples | Microsoft

Chatbots generate reports on a variety of subjects that look like they were written by humans. There is concern that students will let chatbots write their assignments.

Pete Lozzi asked ChatGPT to compare a friend's resume to a job description. It identified matches that helped in the interview.

A textbook author gave ChatGPT the first 12 chapters of a book and asked it to write a 13th chapter. It did a good job.

Some things you can ask ChatGPT:

- "Summarize War and Peace in 1 paragraph."
- "Generate a congratulations letter for a graduate who was a scholar and athlete."
- "Give me a history of Apple."
- Write computer code.

See how chatbots analyzed this web site.

A Stanford Daily poll in January 2023 reported that

"around 17% of Stanford student respondents reported using ChatGPT to assist with their fall quarter assignments and exams.

Of those 17%, a majority reported using the AI only for brainstorming and outlining. Only about 5% reported having submitted written material directly from ChatGPT with little to no edits, according to the poll."


These chatbots have a tremendous volume of data to draw upon.

According to a 2023 article in The Atlantic, researchers at Google Books estimate that since Gutenberg, humans have published more than 125 million titles, collecting laws, poems, myths, essays, histories, treatises, and novels. An Epoch AI team estimates that 10 million to 30 million of these books have already been digitized.


Other current applications:

Text to speech: ElevenLabs - Prime Voice AI Text to Speech | Voice Cloning

Image generation: DALL-E is an image generator from OpenAI.

For example, you can ask it for an image of a space shuttle flying above New York City.

Edit photos:

- Topaz Photo AI
- Luminar Neo
- inPixio

When computers came into general use in the 1960s, there was a lot of speculation that they would become smarter than humans within 10 years.

In the movie 2001: A Space Odyssey (1968), a computer called HAL (shift each letter of "IBM" back by one and you get "HAL") takes over the spaceship after the crew decides it has made a mistake and tries to turn it off. HAL controls most of the ship's functions and uses natural language to communicate with the crew.

There was fear that in 30 years this could actually happen.

But there were also many good practical uses of AI: speech recognition, language translation, ...

ELIZA, an early natural language processing program created from 1964 to 1966 at MIT by Joseph Weizenbaum, simulated a psychotherapist. It was one of the first chatbots. When it was put on Tandy/Radio Shack computers in the 1970s, users found it quite engaging.

The example I like to give may not be real, but it is representative of some of the problems. One test of success for translation was to translate a phrase from one language to another, translate it back, and see if you got the same thing.

A language translation program was written to translate between English and Russian. The phrase "The spirit is willing but the flesh is weak" was translated into Russian and then back into English.

It came back as "The vodka is good but the meat is rotten."

It soon became apparent that the human brain was orders of magnitude more complex than any computing technology.

The idea of an intelligence explosion was revived in 1993 by the author and computer scientist Vernor Vinge, who called it "the singularity".

See Why Computers Won't Make Themselves Smarter | Ted Chiang, The New Yorker, 2021

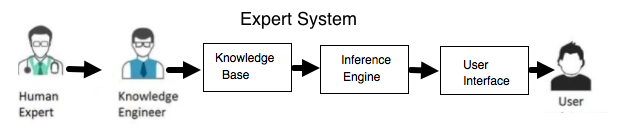

In the 1980s there was a lot of work on expert systems: computer systems that emulate the decision-making ability of a human expert.

They were generally rule-based systems built on if-then rules.

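Forward chaining starts from the known facts and repeatedly applies any if-then rule whose conditions are satisfied, adding its conclusion as a new fact, until it reaches a conclusion or no more rules apply.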
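A minimal sketch of forward chaining in Python, assuming a hypothetical toy rule base (the classic animal-identification example, not taken from any real expert system) in which each rule is a set of conditions plus one conclusion:

```python
# Hypothetical toy rule base: (conditions that must all be known, conclusion).
RULES = [
    ({"has_fur"}, "mammal"),
    ({"mammal", "eats_meat"}, "carnivore"),
    ({"carnivore", "has_stripes"}, "tiger"),
]


def forward_chain(facts, rules):
    """Fire every rule whose conditions are all known facts, adding its
    conclusion, and repeat until a full pass adds nothing new."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # `<=` on sets means "is a subset of": all conditions are known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    print(sorted(forward_chain({"has_fur", "eats_meat", "has_stripes"}, RULES)))
```

Starting from has_fur, eats_meat, and has_stripes, the loop derives mammal, then carnivore, and finally tiger. Nothing here says which rule "should" fire first; the system simply keeps going while new facts appear.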

Backward chaining starts with a list of goals (or a hypothesis) and works backwards from the consequent to the antecedent to see if there is data available that will support any of these consequents.
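A minimal backward-chaining sketch in Python, assuming a hypothetical toy animal-identification rule base. It starts from a goal and recursively tries to prove the conditions of any rule that concludes that goal, stopping at facts that are already known:

```python
# Hypothetical toy rule base: (conditions that must all hold, conclusion).
RULES = [
    ({"has_fur"}, "mammal"),
    ({"mammal", "eats_meat"}, "carnivore"),
    ({"carnivore", "has_stripes"}, "tiger"),
]


def backward_chain(goal, facts, rules, seen=None):
    """Return True if `goal` is a known fact, or if some rule concludes it
    and every condition of that rule can itself be proved."""
    if goal in facts:
        return True
    seen = set() if seen is None else seen
    if goal in seen:  # guard against circular rules
        return False
    seen = seen | {goal}
    for conditions, conclusion in rules:
        if conclusion == goal and all(
            backward_chain(c, facts, rules, seen) for c in conditions
        ):
            return True
    return False


if __name__ == "__main__":
    print(backward_chain("tiger", {"has_fur", "eats_meat", "has_stripes"}, RULES))
```

To prove tiger, it needs carnivore and has_stripes; to prove carnivore, it needs mammal and eats_meat; and so on down to the known facts. Only the rules relevant to the goal are examined.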

These systems ran into difficulties because subject-matter experts who had been doing a job for decades frequently couldn't explain how they made decisions.

For example, my cousin, who had just graduated from medical school, went into practice with a doctor who had been practicing medicine for more than 30 years.

The older doctor would see someone walk in the door and say, "He has appendicitis." He was usually right but couldn't explain how he did it.

See: Rule Based Expert Systems

In the 2010s, advances made deep learning much more practical. Deep learning uses neural networks (software loosely modeled on the brain, built from artificial neurons) that learn to do tasks, progressively improving their performance, by considering examples.
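To make "learning by considering examples" concrete, here is a minimal Python sketch of a single artificial neuron (a perceptron) learning the logical AND function from four labeled examples. It is a deliberate simplification, not deep learning itself: a deep network stacks many layers of such neurons and is usually trained with backpropagation, which this sketch does not show.

```python
# Labeled training examples for logical AND: (inputs, desired output).
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """An artificial neuron: weighted sum of the inputs plus a bias,
    then a step function that outputs 1 or 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=1):
    """Perceptron learning rule: after each wrong answer, nudge the
    weights and bias in the direction that reduces the error."""
    weights = [0, 0]
    bias = 0
    errors_per_epoch = []
    for _ in range(epochs):
        errors = 0
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                errors += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        errors_per_epoch.append(errors)
    return weights, bias, errors_per_epoch


if __name__ == "__main__":
    weights, bias, errors = train(EXAMPLES)
    print("mistakes per pass:", errors[:8])  # falls to 0 after a few passes
    for inputs, target in EXAMPLES:
        print(inputs, "->", predict(weights, bias, inputs), "expected", target)
```

Nobody writes an if-then rule for AND here; the neuron's weights are adjusted from the examples until it stops making mistakes. This also hints at the explainability problem: the "knowledge" ends up as numbers, not readable rules.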

This started the speculation about computers taking over humankind again.

See Deep Learning | MIT Technology Review, 2013

Deep learning is being used among other things to develop self-driving cars.

See Deep Learning for Self-driving Cars | princeton.edu

A problem with deep learning systems is that you can't easily tell how they reached their conclusions. The neural networks cannot readily be reverse engineered, so trusting these systems requires a leap of faith.

Starting in May 2018, under its General Data Protection Regulation (GDPR), the European Union may require that companies be able to give users an explanation for decisions that automated systems reach.

See The Dark Secret at the Heart of AI - MIT Technology Review.

But in 2023, we are still a long way from a computer program like HAL.

History:

The history overview on OpenAI's chat page, linked below, is good, but it requires you to create an account.

The following is a list I created around 2021.

Links:

AI History Overview | OpenAI

Click History in the left column.

- 1956: The term Artificial Intelligence was coined. The Dartmouth conference marked the birth of AI as a distinct field of study.

- 1980s: Expert systems. They had limited success, because experts in many fields had some innate ability to analyze situations that they could not explain well enough to automate.

- 2010s: Deep learning. See Deep Learning - MIT Technology Review

- Cognitive computing. See also The Difference Between AI, Machine Learning, and Deep Learning? | NVIDIA Blog

- Ethics and responsible AI, e.g. training AI knowledge bases on racially diverse data.

Timeline of artificial intelligence - Wikipedia

A beginner's guide to artificial intelligence, machine learning, and cognitive computing

European Union regulations on algorithmic decision-making and a "right to explanation" | 2016 ICML Workshop on Human Interpretability in Machine Learning (WHI 2016)

Deep Learning | MIT Technology Review, Apr. 2017

The Dark Secret at the Heart of AI - MIT Technology Review

The Great A.I. Awakening - The New York Times, Dec. 2016
